I am writing a piece for the print media about scientists and science blogs, and I am running across some interesting numbers that I thought I'd share with you (with many thanks to my friend and colleague, Bob O'Hara, for his advice and help):

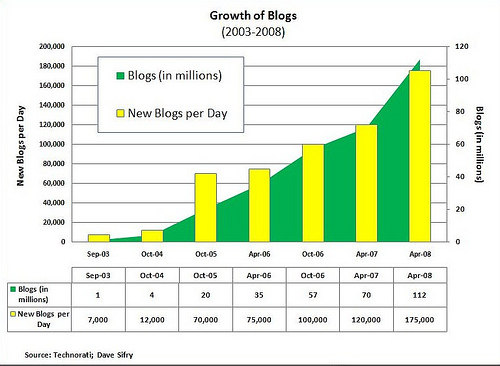

According to Technorati and Dave Sifry's reports, as of April 2008, 175,000 new blogs appeared in the blogosphere daily.

Image: Adam Thierer, based on Technorati data and data collected by Dave Sifry.

(I'll guess this rate of new blogs appearing has increased since then).

Here's an interesting counter: according to a running total, based on Technorati data, as of today at 12:09 pm ET, there are 213,143,930 blogs out there. This counter adds about two new blogs every second to the count, for a total of roughly 175,000 new blogs every day.
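A quick sanity check on the daily figure: 175,000 new blogs per day works out to roughly two per second. The short sketch below just redoes that arithmetic; the only input is the 175,000 figure quoted above, and the variable names are my own.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds in a day

new_blogs_per_day = 175_000  # daily figure quoted in the post

# Convert the daily total into a per-second rate and its inverse.
per_second = new_blogs_per_day / SECONDS_PER_DAY
seconds_per_blog = SECONDS_PER_DAY / new_blogs_per_day

print(f"{per_second:.2f} new blogs per second")        # 2.03
print(f"{seconds_per_blog:.2f} seconds per new blog")  # 0.49
```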

But how many of those are SCIENCE blogs, written by scientists? To answer this question, I am defining "scientists" as those who have a PhD in science and who write about science on a blog. Any ideas how I can answer this question? I did a Technorati search, using "Scientist PhD" and turned up only 2,028 blogs, at least some of which merely embed a video entitled "Scientist PhD" (so they probably do not all fulfill my criteria). Technorati's blog topics page only yields roughly 70 science blogs -- but I know there are more than that out there!


A rough lower bound:

So wrote Laura Bonetta in May 2007.

Bora Zivkovic was quoted as estimating the figure at 1,000 to 1,200, back in May 2007.

(I posted a comment to this effect a moment ago, but it looks to be caught in the ol' spam queue.)

That was the time when a single person could still collect them all. Not any more - the numbers have gone up exponentially since then and I do not know how to track them any more.

Ah, damn. I did suggest simply looking at Bora's blogroll. But if not even that's going to work, we're in trouble.

Check out the discussion on FriendFeed.

v.v. difficult, but I'd still put it at between 1,500 and 2,000. When doing my study, I only used a subset of these, ~1,200. I also limited it to blogs written in English. I did allow non-scientists who publish amateur science blogs (like bird watchers and sky watchers). Certainly searching on "scientist" isn't enough -- you need to search on biologist, chemist, physicist, cosmologist, astronomer.... and on and on.

This is problematic.

1. Just because the blogger is a scientist does not a science blogger make.

2. How much science should the science blogger include to be acknowledged as such? Despite the headline, Pharyngula journal (while maybe interesting for other reasons) is about 5% Evo-Devo or any science at most. I would not call it a science blog at all.

indeed, sara. i can name a few science blogs that would NOT qualify as science blogs using my criteria, so i need to modify those so i am including as many SCIENCE blogs as possible.

We had this discussion almost three years ago, but it is not a bad idea to revisit this every year or so as the scenery changes.

The next question is whether a site with mixed content and high throughput (like Pharyngula, Greg Laden's blog, Phil Plait's Bad Astronomy, etc.) has a comparable amount of science to a more restrictive blog with a lower firing rate. . . didn't somebody look at that?

Trying to count science blogs is like trying to count earthquakes: whatever number you get won't be meaningful unless you also specify the magnitude range you've chosen to survey.

indeed, blake. counting science blogs is challenging because most scientists write about other topics besides science, so that means i have to make some carefully defined criteria to reliably identify them while simultaneously excluding spam blogs and anti-science blogs. i guess this is a number that can only be agreed upon by consensus, especially since we are discussing a moving target anyway.

An interesting way to estimate this might be to use capture-recapture methods. The simplest version is the kind used by ecologists. They mark some catches, drop them back in the lake, and then fish at random to see how many tagged fish they catch. They use this to estimate the number of fish in the lake. To do this you need two independent sources of information. Sample the first list (e.g., Technorati) and "mark" the science blogs. Then sample the second list (e.g., Google blogs or blogpulse) to see how many of the marked blogs are "recaptured." By comparing the number caught in both samples (recaptures) with the number caught in only one sample, you estimate (by various methods) the number not caught in either sample, and from that the total population. Here's a link that describes some of the models.

I'm not a capture recapture expert so maybe someone else has an easy way to do this for science blogs.
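For what it's worth, the simplest two-sample version of the capture-recapture idea can be sketched in a few lines. This is only a minimal illustration, assuming the two lists are independent samples of the same population. It uses the classic Lincoln-Petersen estimator plus Chapman's small-sample variant, which are standard textbook formulas rather than anything proposed in this thread. The function names and the counts are hypothetical, made up purely for the example.

```python
def lincoln_petersen(marked_first: int, caught_second: int, recaptured: int) -> float:
    """Estimate the total population from two independent samples.

    marked_first  -- items found ("marked") in the first list
    caught_second -- items found in the second list
    recaptured    -- items that appear in both lists
    """
    if recaptured <= 0:
        raise ValueError("need at least one recapture to estimate the total")
    return marked_first * caught_second / recaptured


def chapman(marked_first: int, caught_second: int, recaptured: int) -> float:
    """Chapman's variant: less biased for small samples, defined with zero recaptures."""
    return (marked_first + 1) * (caught_second + 1) / (recaptured + 1) - 1


# Hypothetical counts, for illustration only (NOT real data):
n1 = 300  # science blogs found in the first list
n2 = 250  # science blogs found in the second list
m = 75    # science blogs found in both lists

print(round(lincoln_petersen(n1, n2, m)))  # 1000
print(round(chapman(n1, n2, m)))           # 993
```

The estimate is only as good as the independence assumption: if both lists tend to pick up the same popular blogs, the overlap is inflated and the total is underestimated.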

Interesting problem, without an easy answer.

Using Technorati may skew the results a bit because I learned that they only index blogs hosted on blogspot.com if the owner "claims" it. That means that many bloggers who aren't Technorati savvy aren't listed there*. Also, I've found that bloggers often don't write about their "PhD"s, but do identify themselves as post docs or faculty members in their profile or "about me" page, which unfortunately doesn't show up on Technorati. And, of course, some science bloggers don't mention their educational status at all. I honestly don't think that there's an easy way to determine that information short of actually looking at each blog.

I would argue that the actual content of the blogs is a better standard anyway, since limiting "science blogs" to those written by Ph.D. scientists excludes what I think are good science blogs by graduate students and other non-PhDs who are working scientists (like erv here at Science Blogs) and non-scientist science writers (like The Loom).

* This was a problem when I hosted the scientiae carnival. Several of the submissions using Technorati tags were overlooked because Technorati did not actually index those blogs.

There's been a session at the recent Joint Statistical Meetings about statistics with blogs and online networks; see http://www.amstat.org/meetings/jsm/2008/onlineprogram/index.cfm?fuseact…, and maybe contact the authors for ideas. Generally you will have issues with measures of size and analysis weights: how frequently messages are posted, how many people read the blog, etc. Sampling networks is yet another interesting issue, but for that one, there should be some literature around: epidemiologists have been interested in that kind of stuff for a couple of decades by now, so there's been some progress made on that.

Mark Handcock, the discussant on that JSM session, is probably the best authority on networks and methods for their analysis.

My blog engine is livejournal.com, by the way. But my blog is not solely about research, unlike say Gelman's, although I do mention some academic problems (mostly bureaucratic ones though :)) from time to time. Livejournal has the idea of communities -- usually that's where people post questions with the hopes of somebody hinting an answer, and I am a member of a dozen or two on statistics, mathematics, social sciences, public health, you name it. I don't know if that is going to count for you as a science blog. Or any of those "HELP ME WITH THE HOMEWORK!!!" groups on facebook :))

cc: to Bob's email

As for Bora Z's statement that scientists have been slow in blah-blah-blah... well again there was a session about Wikipedia: http://www.amstat.org/meetings/jsm/2008/onlineprogram/index.cfm?fuseact…. As soon as the tenure requirements in a typical research university will say, "Publish k papers a year (k=0.5-1 in economics, k>5 in medical sciences), publish 20 updates on Wikipedia on your research topics" -- gosh, Wikipedia will make all research journals obsolete in a year. And if you look into the intranets of American universities, all instructors use blackboard, which is a 3rd party tool for setting up almost all aspects of university courses -- some faculty teach 100% online courses using it. So give me a good definition of "online tools" to be working with -- that's what technocrats are disgustingly terrible at compared to social scientists... who are disgustingly terrible with math though :)

And on the other hand, many "amateurs" are willing to approach science more aggressively than those who are working in science as a day job. Also, I think that ambitious but practical students of M.S. programs should not necessarily be excluded if they are writing about science.

Female Science Professor lives very much a "scientific life," but she admits that her blog is not science at all. Notwithstanding, her approach to all problems (even on the social/administrative side) is so scientific! So I would not know whether to qualify her or exclude her, honestly. She does not review articles, but she promotes a lifestyle.

Science is such a culture, and therefore, science blogging is difficult to define.

If one is really interested in finding them all, why not combine the above methods (searches, catch and release, personal blogrolls), throw them all into a database or blogroll dedicated to the purpose at hand, and allow everyone (or a select group) to plow through them to determine their validity as a "science" blog (using defined criteria)? Allow the group to submit and cull at will. My guess is that actual addition or subtraction from the list would need to be restricted to a few trusted people.

A bit of work, to be sure, but it seems like this distributive delegation of work could get the job done in weeks with a good number of volunteers.

Or perhaps this is an incredibly dumb and naive idea.

Irradiatus -- this is an excellent idea. but my deadline rapidly approaches.

although the data generated by volunteers could be used for a more rigorous study .. one that is publishable in the primary literature (i'd love THAT!). i'll bounce this idea off a few colleagues and see what they think.

We try and track as many 'hard science' blogs - written by a working scientist, mostly about science - at NPG as possible. We've definitely missed some but get most and track around a thousand, so I'd second Christina's estimates (1.5k -> 2k for English language blogs).

Euan -- according to your criteria, my blog would not qualify as a science blog!

i think that a science blog is not the exclusive province of a "working scientist" since there are more than a few blogs written by science undergrads, grad students, and science writers and science journalists, not to mention all the postdocs out there (are they considered "working scientists" even though they are actually apprentices who don't have their own lab?) and even PhD scientists who have jumped through all the hoops but who are not working as scientists at this time. as i said, my goal is to include as many blogs about science as possible, not to exclude them because my criteria were excessively restrictive.

you could try a mathematical formula to get a good idea of how many there are.

get a top 50 list of search engines and do a search in each one...then take all the results and cross reference them and exclude the percentage of NON relating results...then take the actual total you come up with and cross reference the total with an estimate considering there are way more than 25 search engines out there...

example:

x= total blogs

(average results of top 50 search engines)=(1.2 million - 400k nonrelated) * (the actual number of search engines)= x

i think it would be more accurate than a catch and release attempt...or any other. good luck.