Recently, a paper published in a Chinese journal of science by Monckton, Soon, Legates and Briggs attracted a small amount of attention by claiming that climate science models "run hot" and therefore overrepresent the level of global warming caused by human greenhouse gas pollution. The way they approached the problem of climate change was odd. The Earth's climate system is incredibly complex, and climate models used by mainstream climate scientists address this complexity and therefore are also complex. Monckton et al chose to address this complexity by developing a model they characterize as "irreducibly simple." I'm not sure if their model is really irreducibly simple, but I am pretty sure that a highly complex dynamic system is not well characterized by a model so simple that the model's creators can't think of a way to remove any further complexity.

The same journal, Science Bulletin, has now published a paper, "Misdiagnosis of Earth climate sensitivity based on energy balance model results," by Richardson, Hausfather, Nuccitelli, Rice, and Abraham that evaluates the Monckton et al paper and demonstrates why it is wrong.

From the abstract of the new paper:

Monckton et al. ... use a simple energy balance model to estimate climate response. They select parameters for this model based on semantic arguments, leading to different results from those obtained in physics-based studies. [They] did not validate their model against observations, but instead created synthetic test data based on subjective assumptions. We show that [they] systematically underestimate warming ... [They] conclude that climate has a near instantaneous response to forcing, implying no net energy imbalance for the Earth. This contributes to their low estimates of future warming and is falsified by Argo float measurements that show continued ocean heating and therefore a sustained energy imbalance. [Their] estimates of climate response and future global warming are not consistent with measurements and so cannot be considered credible.

The Monckton model does not match observed temperatures, and consistently underestimates them. We don’t expect a model to perfectly match measurements, but when a model is wrong so much of the time in the same direction, the model is demonstrably biased and needs to be either tossed or adjusted. However, you can’t adjust an “irreducibly simple” model, by definition. Therefore the Monckton model is useless. And, as pointed out by Richardson et al, the basic values used in the model were badly selected.
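To make "wrong in the same direction" concrete, here is a minimal sketch of a bias check: compute model-minus-observation residuals, then look at their mean and at how often the model falls below the observations. The numbers are hypothetical values invented for illustration; they are not data from either paper, and the function names are my own.

```python
def residuals(modeled, observed):
    """Model-minus-observation differences, one per time step."""
    return [m - o for m, o in zip(modeled, observed)]


def mean_bias(modeled, observed):
    """Average residual; a value far from zero signals systematic bias."""
    diffs = residuals(modeled, observed)
    return sum(diffs) / len(diffs)


def fraction_too_cold(modeled, observed):
    """Share of time steps where the model sits below the observations."""
    diffs = residuals(modeled, observed)
    return sum(1 for d in diffs if d < 0) / len(diffs)


# Hypothetical temperature anomalies in degrees C, invented for illustration.
observed = [0.10, 0.25, 0.40, 0.55, 0.70]
modeled = [0.05, 0.15, 0.25, 0.35, 0.45]

print("mean bias:", mean_bias(modeled, observed))
print("fraction of steps too cold:", fraction_too_cold(modeled, observed))
```

An unbiased model would scatter on both sides of the observations, so the mean would sit near zero and the fraction near one half. A model that is low at every step, as in this toy series, fails on both counts.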

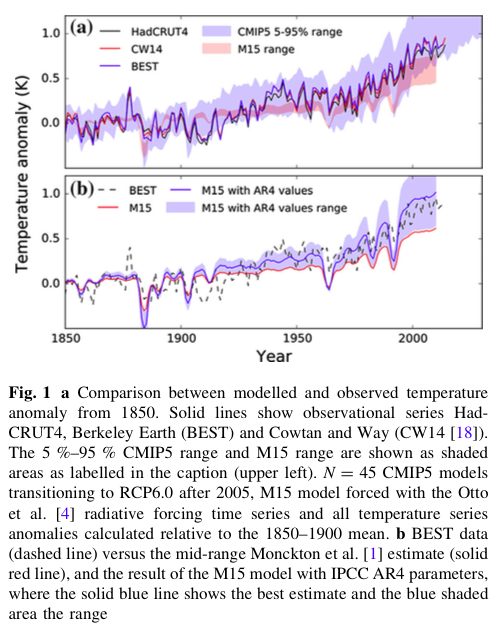

Figure 2 from Richardson et al demonstrates the problem with bias. The pink band in the upper figure and the red/pink line in the lower figure show the Monckton model tracking across time from 1850, compared to several sets of actual observations. The irreducibly simple model may not be irreducibly wrong, but it is irreducibly useless.

Monckton et al rely on the assumption that the Earth’s surface temperature varies by only 1% around a long term (810,000 year) average. This “thermostasis”, they argue, means that there are no positive feedbacks that move the Earth’s temperature higher. This ignores the fact that for that entire record one of the main determinants of global surface temperature, greenhouse gases, has also not varied from a fairly narrow range. But now human greenhouse gas pollution has pushed greenhouse gas concentrations well outside that long term range, and heating has resulted.

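The Monckton model is contradicted by observations of global ocean heat content. A near-instantaneous response to forcing would mean the Earth has no net energy imbalance. However, recent Argo measurements of ocean heat content indicate significant warming over the last decade, which requires a sustained imbalance.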

During recent years, the rate at which global mean surface temperatures have gone up has been somewhat reduced, and various factors have been suggested as explanations. Monckton et al. assume only one of these explanations to be true: that the climate models used by all the other climate scientists are wrong. They ignore other very likely factors. Monckton et al state that models used by the IPCC “run hot” without any reference to the fairly well developed literature that examines differences between observed temperatures and model ranges. They also misinterpreted IPCC estimates of various important feedbacks to the climate system.

I asked paper author John Abraham if it is ever the case that a simpler model would work better when addressing a complex system. "While simple models can give useful information, they must be executed correctly," he told me. "The model of Monckton and his colleagues is fatally flawed in that it assumes the Earth responds instantly to changes in heat. We know this isn't true. The Earth has what's called thermal inertia. Just like it takes a while for a pot of water to boil, or a Thanksgiving turkey to heat up, the Earth takes a while to absorb heat. If you ignore that, you will be way off in your results."
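Abraham's point about thermal inertia can be illustrated with a textbook one-box energy balance model, C dT/dt = F - λT. This is a rough sketch, not the model from either paper, and the parameter values (forcing, feedback parameter, heat capacity) are assumptions chosen only to show the behavior.

```python
import math


def instant_response(forcing, feedback):
    """Warming if the Earth responded instantly: T = F / lambda."""
    return forcing / feedback


def one_box_response(forcing, feedback, heat_capacity, years):
    """Warming after `years` of constant forcing in a one-box model.

    Analytic solution of C dT/dt = F - lambda * T with T(0) = 0.
    """
    equilibrium = forcing / feedback
    return equilibrium * (1.0 - math.exp(-feedback * years / heat_capacity))


def energy_imbalance(forcing, feedback, temperature):
    """Net heat still flowing into the system: N = F - lambda * T."""
    return forcing - feedback * temperature


# Assumed, illustrative values (not taken from either paper).
FORCING = 3.7        # W/m^2, a constant step forcing
FEEDBACK = 1.2       # W/m^2/K
HEAT_CAPACITY = 8.0  # W yr/m^2/K, a hypothetical effective heat capacity

t_instant = instant_response(FORCING, FEEDBACK)
t_lagged = one_box_response(FORCING, FEEDBACK, HEAT_CAPACITY, years=10)

print("instant-response warming (K):", round(t_instant, 2))
print("one-box warming after 10 years (K):", round(t_lagged, 2))
print("imbalance after 10 years (W/m^2):",
      round(energy_imbalance(FORCING, FEEDBACK, t_lagged), 2))
```

With any nonzero heat capacity the warming lags its equilibrium value, and the imbalance stays positive while the system is still heating. Setting the response to instant forces the imbalance to zero, which is the feature the Argo ocean heat data rule out.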

I also contacted author Dana Nuccitelli, who recently published the book "Climatology versus Pseudoscience," to ask him to place the Monckton et al. study in the broader context of climate science contrarianism. He told me, "In my book, I show that mainstream climate models have been very accurate at projecting changes in global surface temperatures. Monckton et al. created a problem to solve by misrepresenting those model projections and hence inaccurately claiming that they "run hot." The entire premise of their paper is based on an inaccuracy, and it just goes downhill from there."

This is not Nuccitelli's first rodeo when it comes to the Monckton camp. "It's perhaps worth noting that these same four authors (Legates, Soon, Briggs, and Monckton) wrote another error-riddled paper two years ago, purporting to critique the paper my colleagues and I published in 2013, finding a 97% consensus on human-caused global warming in the peer-reviewed climate science literature," he told me. "The journal quickly published a response from Daniel Bedford and John Cook, detailing the many errors those four authors had made. There seems to be a pattern in which Monckton, Soon, Legates, and Briggs somehow manage to publish error-riddled papers in peer-reviewed journals, and scientists are forced to spend their time correcting those errors."

Monckton et al cherry-picked the available literature, thus ignoring a plethora of standing arguments and analysis that would have contradicted their study. They get the paleoclimate data wrong, ignore over 90% of the climate system (the ocean), select inappropriate parameters, and seem unaware of prior work comparing models and data. Monckton et al also fail to provide a useful alternative valid model.

Monckton et al failed in their attempt to demonstrate that IPCC estimates of climate sensitivity run hot. Their alternative model does not perform well, and is strongly biased in one direction. They estimate future warming based on “assumptions developed using a logically flawed justification narrative rather than physical analysis,” according to Richardson et al. “The key conclusions are directly contradicted by observations and cannot be considered credible.”

Also of interest

Aside from the obvious and significant problems with the Monckton et al paper, it is also worth noting that the authors of that work are well known as "climate science deniers" or "contrarians." You can find out more about Soon here. Monckton has a long history of attacking mainstream climate science as well as the scientists themselves. To be fair, it is also worth noting that two of the new paper's authors have been engaged in this discussion as well. John Abraham has been eDebating Monckton for some time. (See also this conversation with me, John Abraham, and Kevin Zelnio.)

Dana Nuccitelli is the author of this recent book on climate science deniers and models.

"All models are wrong. Some are useful."

But not this one.

The term "irreducibly simple model" isn't even meaningful. Their model *could* be simplified, but there's no point because it's already too simple to describe a high-order lumped-parameter system.

Ya think?

I make this point from time to time but it is often not well received: You can't let the Denialists frame the issue.

Seriously, knocking down one more zombie attempt to question the energy imbalance-- which is what these claims about mean surface temperature all are-- is, in fact, letting them waste precious time, and keep that meme alive.

Scientists should be (and some are) trying to improve data collection, and short term and regional prediction and projection. Public policy is not going to be driven by equilibrium sensitivity calculations. It will be driven by linking concrete phenomena that people experience-- drought and flood and heat and cold and so on-- to that imbalance.

"Monckton et al rely on the assumption that the Earth’s surface temperature varies by only 1% around a long term (810,000 year) average."

So, they basically assumed a result and built a "model" around it? Am I missing something?

The term “irreducibly simple model” isn’t even meaningful.

I can think of a sensible definition for the term: a model with zero free parameters would be "irreducibly simple". But I don't think it means what Monckton et al. think it means. And situations where a model with zero free parameters is actually useful are rare; atmospheric circulation certainly isn't one of them.

Monckton et al rely on the assumption that the Earth’s surface temperature varies by only 1% around a long term (810,000 year) average.

That would be about 3 K. Some of the ice age excursions were close to that level, but there have been larger excursions than that in geological history, e.g., tropical plants once grew at what today are subarctic latitudes, and further back there was a "snowball Earth" period. So this assumption seems to be false. They might fare better if they require something close to the current continental configuration (IIRC global climate became significantly colder once the isthmus connecting the Americas developed), but then that would not be an irreducibly simple model--and they also have to ignore the extreme forcing of CO2.
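The "about 3 K" figure follows if the 1% is taken relative to absolute temperature. A quick check, assuming a global mean surface temperature of roughly 288 K (a commonly quoted round number, not a value from the paper):

```python
# Assumed global mean surface temperature in kelvin (round-number assumption).
MEAN_SURFACE_TEMP_K = 288.0


def percent_of_absolute(temp_kelvin, percent):
    """Size in kelvin of a given percentage of an absolute temperature."""
    return temp_kelvin * percent / 100.0


print(percent_of_absolute(MEAN_SURFACE_TEMP_K, 1.0), "K")
```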

Here's an even simpler model:

total surface warming = 1.5 C per trillion tons of carbon emitted, with 5-95% confidence levels of 1.0 and 2.1 C.

https://andthentheresphysics.files.wordpress.com/2015/03/cumulativeemis…

"The proportionality of global warming to cumulative carbon emissions," H. Damon Matthews et al, Nature v459, 11 June 2009, pp 829-832.

doi:10.1038/nature08047
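As a sketch, the one-line model quoted above fits in a few lines of code. The coefficients are the ones given in this comment (1.5 C per trillion tonnes of carbon, with a 5-95% range of 1.0 to 2.1 C); the function name and the example emission total are hypothetical.

```python
# Warming per trillion tonnes of carbon emitted, as quoted above.
WARMING_PER_TTC_LOW = 1.0   # 5% bound, degrees C
WARMING_PER_TTC_BEST = 1.5  # central estimate, degrees C
WARMING_PER_TTC_HIGH = 2.1  # 95% bound, degrees C


def warming_from_emissions(trillion_tonnes_carbon):
    """Return (low, best, high) warming in degrees C for cumulative emissions."""
    return (
        WARMING_PER_TTC_LOW * trillion_tonnes_carbon,
        WARMING_PER_TTC_BEST * trillion_tonnes_carbon,
        WARMING_PER_TTC_HIGH * trillion_tonnes_carbon,
    )


# Hypothetical cumulative total of half a trillion tonnes of carbon.
low, best, high = warming_from_emissions(0.5)
print(f"{best:.2f} C (5-95% range {low:.2f} to {high:.2f} C)")
```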

I'm glad to see this paper finally published.

However, figure 1a shows that the model is useful, as long as the parameters are properly set. This is a nitpick, I know, but it is important to separate modelling errors from parameter setting errors. For example, I can make a GCM look like crap by fiddling with certain parameters. The model is useful when the parameters are set using good methods.

The figure shows that the model is completely wrong.

Hi Steven,

Figure 1b shows the M15 model with the parameters they estimate for the IPCC. We pointed out in our paper that the model did much better when using parameters inferred from the IPCC. We then used linear regression to estimate the product of feedbacks and transient fraction, showing that the Monckton assumed value was outside of the confidence intervals. There's also some discussion in there about the complexities of response time and how this should be considered to get useful things out of the EBM approach.

We ended up using "M15 model" to refer to "M15 parameterisation of the energy balance model" because it was shorter and we had to say it a lot!

Hi dean @ #4,

"So, they basically assumed a result and built a “model” around it? Am I missing something?"

Sort of. They looked at the temperature record from ice cores and decided that it looked stable. They then said that if an electronic engineer were to build a stable circuit, then they would choose a circuit with a certain gain factor. Therefore the climate has this effective gain factor.

Bigger values of the gain are still "stable", but Monckton et al. chose a range that gives a small warming. Because they say that's how circuits are designed.
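For readers unfamiliar with the circuit analogy, here is a small sketch using the textbook feedback relation, in which a loop gain g amplifies the no-feedback response by 1/(1 - g). The specific gain values are illustrative assumptions, not the ranges chosen in either paper.

```python
def feedback_amplification(loop_gain):
    """Closed-loop amplification 1 / (1 - g) for a loop gain g below 1."""
    if loop_gain >= 1.0:
        raise ValueError("loop gain must be below 1 for a stable response")
    return 1.0 / (1.0 - loop_gain)


# Illustrative loop gains; every one of these is stable (g < 1).
for g in (0.1, 0.5, 0.8):
    print(f"g = {g}: response amplified {feedback_amplification(g):.2f}x")
```

Every gain below 1 gives a finite, stable response, so stability alone does not pin the gain to a small value. Picking a small gain is what produces the small warming.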

Researchers have used the same palaeoclimate data and they actually calculated values relevant for climate change. The results from palaeoclimate studies contradict the Monckton team's assumptions. I can only guess that the Monckton team didn't know about these studies or maybe they forgot to cite them and explain why their assumptions differ from measurement-based calculations.

@12: Hmmm. Even my sophomore stat students know better than that. I asked simply because I couldn't believe people would do something so blatant.

'You can find out more about Soon here'. All you need to know about the tone of the 'debate' on this site (and see the ferocious response to Mosher's mildly-expressed comment, which should have raised questions. But didn't)