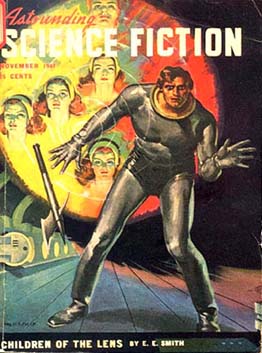

There is a trope in classic science fiction in which humans are "special".

We get out there, into the galaxy, and there's a bunch of aliens, and they're all Really Dumb.

So the clean-cut, heroic, square-jawed human takes charge and saves the day. Fini.

What if it is true?

OK, I've been reading too much Alastair Reynolds recently, trying to catch up on the Conjoiner/Inhibitor series, but there is a serious issue here for resolving the Fermi Paradox.

The basic issue is simple: there are a lot of planets; we think a significant fraction of these are habitable (in the narrow sense of having liquid water for extended periods); a lot of people suspect that life arises rapidly whenever it can (sorry, Grauniad, I don't know where you got that one from); and, suitably defined, "intelligence" is adaptive and ought to arise not infrequently and to evolve toward greater intelligence.

Now, any of this could be wrong, and we need data; in the meantime, we're theorists, dammit, so we may speculate.

There are three "obvious" resolutions to the Fermi Paradox: that communicating intelligence arises very rarely, that it is very short-lived when it does arise, or that we are not getting the message.

The short-lived hypothesis has subcategories: most environments are too unstable and kill-offs are severe (this can also explain rarity of occurrence); or there are Berserkers/Inhibitors that deliberately kill intelligences; or intelligence is maladaptive and self-destroys, possibly destroying or severely damaging planetary ecosystems in the process.

All of these explanations are plausible at some level, but each faces the universal problem of exceptionalism (it only takes one lucky intelligent civilization that lives long and expands to make the Fermi Paradox a severe problem), and all vary in popularity with social and political factors.
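The exceptionalism point can be made quantitative with a standard piece of probability. As a sketch, assume (hypothetically) N civilizations that succeed or fail independently, each with probability p of living long and expanding:

$$
P(\text{at least one expands}) = 1 - (1 - p)^{N} \approx 1 - e^{-Np}
$$

With illustrative numbers p = 10^{-3} and N = 10^{4}, Np = 10 and P ≈ 1 - e^{-10} ≈ 0.99995. Even a harsh per-civilization filter leaves near-certainty that someone gets through unless Np is well below 1, which is why "short-lived" explanations have to be close to universal to work.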

So, in aggregate, in our one observable system, we see over long periods a slow increase in neural mass and connectivity. Individual species may find it adaptive to shed metabolically expensive brain matter as things change, but there is some overall secular increase in the hardware we think is needed for intelligence.

Recently, there has been a serious suggestion that our particular level of intelligence was produced when alleles for large brains introgressed from Neanderthals relatively recently; see Neanderthals and human brains.

This plausibly led to a large change in mean "intelligence", and was strongly adaptive.

Introgressions are not rare, but how rare would a jump in neuron capacity of that size be just at this stage? If it is rare enough, humans may have jumped over the "smart enough to be dangerous, stupid enough to not realise" stage.

Or not.

What do I mean by that? Well, humans clearly can destroy ecosystems, and we are in fact more than smart enough for that. We could kill ourselves off, and the possibility of that occurring has been a long-term concern.

We can also see how to leverage what intelligence we have to become smarter. Even without worrying about transcendence, we technologically leverage our effective intelligence: a person with a computer, or with a computer and a net connection, is in some ways functionally more intelligent than the same person alone, with more memory, rapid data access, and sorting and numerical computing capability.

We can also see, in principle, how we might engineer human intelligence: we will soon understand the effect of allele variation on brain function and start to understand how to experiment with additional variations, that is, brain engineering. This is independent of whether we should do so, and it sets aside science-fictional concepts like direct electronic enhancement of brain capabilities, qualitative re-engineering of brain structure, or artificial intelligence.

We may never get over the threshold of direct brain engineering, we may die off first, but we can conceive of the possibility. Just.

So, how rare is that? 'Cause if "we" were collectively a little bit stupider, this might be inconceivable. We might be smart enough to do damage, smart enough to kill ourselves off, and cause mega-extinctions, but not smart enough to get over ourselves.

My sense is that if we were a little bit dumber, this is in fact what we would do, and I worry that collectively "we" are not smart enough as is.

Just maybe, this is a major component of any resolution of the Fermi Paradox: intelligences do arise and they are adaptive, but a gradual increase in intelligence leaves a species smart enough to do damage for long enough to do it, while it does not last long enough to adapt further and is not smart enough to engineer its own salvation.

Clearly this is amenable to observation: we just need to find a large sample of habitable planets, see how many have archaeological traces of half-assed civilizations, and estimate how they collapsed into total destruction through various stupid routes.

So, a testable hypothesis.

It could also be numerically simulated very simply: we would just need some quantitative parametric measure of intelligence, the typical adaptive step per (long) time interval, and an estimate of the width of the danger zone, where species are smart enough to do damage but not smart enough to get out of trouble.
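A minimal sketch of such a simulation in Python follows. Everything in it is an assumption for illustration, not a calibrated model: intelligence is a single number in arbitrary units, each long time interval adds a Gaussian step (occasionally negative, to allow for species shedding brain matter), the danger zone is the hypothetical interval [zone_low, zone_low + zone_width), and a species inside the zone destroys itself with a fixed per-interval probability `hazard`. The optional rare large jump (`jump_prob`, `jump_size`) is a hypothetical stand-in for an introgression-like event.

```python
import random


def escape_fraction(n_species=10_000, step_mean=0.1, step_sd=0.05,
                    zone_low=1.0, zone_width=1.0, hazard=0.05,
                    jump_prob=0.0, jump_size=0.0, seed=1):
    """Toy model: fraction of species that cross the danger zone alive.

    All parameters are hypothetical and in arbitrary "intelligence units".
    """
    if step_mean <= 0:
        raise ValueError("step_mean must be positive so every run terminates")
    rng = random.Random(seed)
    zone_high = zone_low + zone_width
    escaped = 0
    for _ in range(n_species):
        level = 0.0
        while level < zone_high:
            # Gradual drift in intelligence per long time interval.
            step = rng.gauss(step_mean, step_sd)
            # Rare large jump (e.g. an introgression-like event).
            if rng.random() < jump_prob:
                step += jump_size
            level += step
            # Smart enough to do damage, not smart enough to get out of trouble.
            if zone_low <= level < zone_high and rng.random() < hazard:
                break  # self-destructed inside the danger zone
        else:
            escaped += 1  # loop ended normally: level reached zone_high
    return escaped / n_species


if __name__ == "__main__":
    print("gradual only:", escape_fraction())
    print("with rare jumps:", escape_fraction(jump_prob=0.01, jump_size=2.0))
```

With the defaults a species makes roughly ten hazard checks at 95% survival each, so a back-of-envelope estimate is about 0.95^10 ≈ 0.6. Raising `jump_prob` or `jump_size` shows how a rare large jump can carry a species across the zone before it has time to destroy itself, which is the conjecture above in miniature; shrinking `hazard` or `zone_width` shows where the effect stops mattering.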

It seems that there is an interesting and plausible region of parameter space where the above conjecture holds, and where the human adaptation of relatively high intelligence (we wish) is rare enough to be interesting. It would have to be more than a one-in-a-million outlier to be interesting, I suspect.

Ah well, such speculation is what Fridays are for.

I think it was Geoffrey Landis who did some statistical models assuming only 1) that there was no magic space travel mechanism, 2) that civilizations had a finite lifetime during which they were interested in space travel (a really long lifetime, millions of years), and 3) that there was a finite lifetime during which there would be enough surplus energy to waste on star travel. He came up with the result that we would probably be the only ones around in the galaxy interested in space travel right now, and that odds are no one else had ever come here. I forget how many intelligences he assumed, but it was non-trivial. Space and time are big. I believe the papers were published in the Journal of the British Interplanetary Society maybe 5 to 10 years ago or so. It's been a long time since I looked at them, and I could be misremembering. The model was fairly simple, and really just based on the fact that it takes hellish amounts of energy to travel from star to star even at 1% of the speed of light. Would civilizations really care for the length of time needed? Even the high-sigma explorers didn't cut it.
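The energy point is easy to check with a few lines of arithmetic. The sketch below computes the relativistic kinetic energy (gamma - 1)mc² of a payload at 1% of light speed and the cruise time to a nearby star. The 1-tonne payload is an assumed, illustrative figure, and the calculation ignores propulsion inefficiency, the rocket equation, and deceleration at the destination, so it is a lower bound on what a real mission would need.

```python
import math

C = 299_792_458.0       # speed of light in vacuum, m/s (exact by definition)
MEGATON_TNT = 4.184e15  # joules per megaton of TNT (conventional value)


def kinetic_energy_joules(mass_kg: float, beta: float) -> float:
    """Relativistic kinetic energy (gamma - 1) * m * c^2 at speed beta * c.

    gamma - 1 is rewritten as beta^2 / (s * (1 + s)) with s = sqrt(1 - beta^2),
    which avoids cancellation error when beta is small.
    """
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must be in [0, 1)")
    s = math.sqrt(1.0 - beta * beta)
    gamma_minus_one = beta * beta / (s * (1.0 + s))
    return gamma_minus_one * mass_kg * C * C


def cruise_time_years(distance_ly: float, beta: float) -> float:
    """Travel time in the launch frame, ignoring acceleration and deceleration."""
    return distance_ly / beta


if __name__ == "__main__":
    payload_kg = 1000.0  # hypothetical 1-tonne probe
    beta = 0.01          # 1% of the speed of light
    e = kinetic_energy_joules(payload_kg, beta)
    print(f"kinetic energy: {e:.3e} J ({e / MEGATON_TNT:.2f} Mt TNT)")
    print(f"cruise time to 4.24 ly: {cruise_time_years(4.24, beta):.0f} years")
```

For a 1-tonne payload at 0.01c this works out to roughly 4.5 × 10^15 J, a little over one megaton of TNT equivalent, delivered just to get a small probe moving, and about 424 years to cover the 4.24 light-years to Proxima Centauri.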

That's interesting, Markk. I like it. Most analyses are so simple-minded about extending 20th-century thinking across billions of years. Chances are that lifeforms live at that level only briefly, as we ourselves will, since we will probably either die out or rapidly alter our reality with the advent of true AI. And we are the only model we have. Looking for 20th-century signals in the sky seems completely futile, really, as such wavefronts are unlikely to cross our path in this century. The future AI earth, if we survive, will probably look upon such signals from alien apes as being totally uninteresting. And this holds even if we have the capacity for superluminal travel.

Even without advanced technology, biological or AI, reaching a certain point in civilization can create a pretty significant bump in intelligence. Just today I read a brief which claims that simply giving kids toys improves IQ by 9 points. Add in better nutrition and the development of writing, publishing, and schooling, and the bump from the transition from primitive to civilized could be pretty significant, even with no short-term change in genetics.

I think Kea has a point. Unless aliens want to contact potential future developing civilizations, they are unlikely to be beaming radio signals at stars with Earth-like planets. Also, if you believe the arguments in the book Rare Earth about the conditions needed, planets with a climate stable enough for the roughly 3 billion years needed to develop may be very rare indeed, perhaps only a couple per galaxy. Certainly the sci-fi shows with dozens of advanced civilizations within a hundred-light-year radius would seem to be preposterous.

Unfortunately, the case that greater intelligence improves survival odds (for civilizations, not individuals) isn't very compelling. Perhaps there is no real gain, i.e. the odds of a species making it could be tiny indeed.

Kea has a good point, as always. If we did run into ETs, since our civilisation is so young and the Universe is about 14 billion years old, they would likely be millions of years more advanced. As for communication, we can't understand dolphins on our own planet very well.

The "Beyond Einstein" posts are fun to follow. Steinn may enjoy this Town Hall announcement. Like Thunderdome, one walks out.

"The National Aeronautics and Space Administration (NASA) and the Department of Energy (DOE) requested that the NRC assess NASA's five Beyond Einstein missions and recommend ONE for first development and launch."

I always thought that was one of the interesting weak points of older editions of Dungeons and Dragons too -- humans may have had the shortest lifespans, but they also had the most character development flexibility, which I thought didn't really make any sense.

Interestingly, I don't think the problem is as bad in some of the better-known pop science fiction, where as often as not humans predominate solely because of makeup and SFX costs but are otherwise presented as part of a community. Babylon 5 was probably the best example of that; I actually remember a bit of a turnabout from the 5th season where it was revealed that the Drazi often hired humans, not as scientists or anything so dignified, but as hired muscle for security jobs and the like.

Fredric Brown's 1949 "Letter to a Phoenix" is another angle on this worth remembering -- that the galaxy may produce lots of stable societies and species, which eventually stultify, and that only H. sapiens manages to utterly trash and burn our civilizations every so often and so the survivors have the opportunity to claim what's left and rebuild, better, from the ruins each time.

It's a chilling idea.