Julia is a nifty new language being developed at MIT

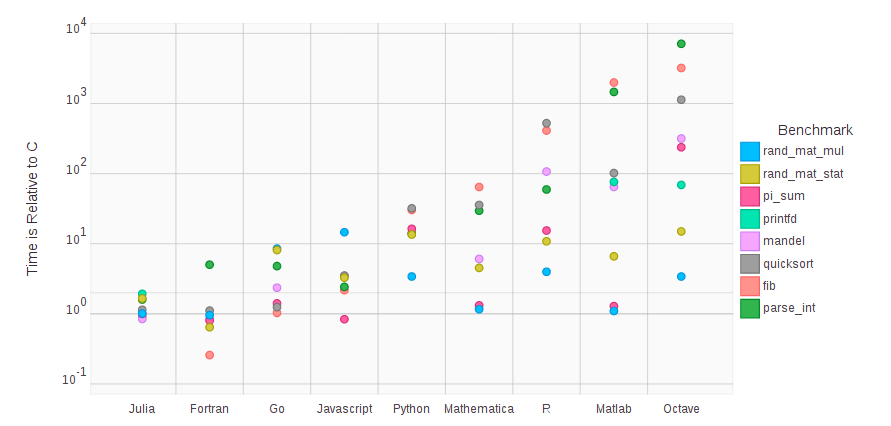

I stole this plot from GitHub; it shows Julia's current performance on some standard benchmarks compared to a number of favourite tools like Python, Java and R, normalized to optimized C code.

And, there, in a single plot, is why Real Programmers still use Fortran...!

A nitpick: Javascript != Java

Fair point - compiled Java ought to do a factor of a few better on some benchmarks than the script does.

The author of Numerical Recipes once said to me, "I don't know what the future computer programming language for science will be, but it will be called Fortran."

The Javascript V8 engine (the one built into Google's Chrome browser) can be significantly faster than Java depending on the circumstances. (There is lots of debate about that to be found on the web.) Thank you for the heads up about Julia.

Actually, the benchmark says that Fortran is merely average.

All the numbers, with the exception of the Fibonacci series, cluster around the C values. And since the Fibonacci implementation is purely recursive (and, honestly, pretty bad), it might well be that the compiler just makes the smarter decision about which path to follow by default here.

Second, the benchmark tests the performance of low-level features to show that Julia performs well here, in contrast to scripting languages. It has no further validity.

As an example, the Fibonacci benchmark is implemented recursively, which is something you would never do in a scripting language because function calls are really expensive there. Not surprisingly, it gives about the worst speeds in all scripting languages.
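To make the function-call argument concrete, here is a minimal Python sketch. It is not the benchmark's actual code; the function names and the call counter are illustrative assumptions. It compares the purely recursive Fibonacci with a plain loop and counts how many calls the recursive version makes.

```python
def fib_recursive(n, counter=None):
    """Naive doubly recursive Fibonacci; counter[0] tallies function calls."""
    if counter is not None:
        counter[0] += 1
    if n < 2:
        return n
    return fib_recursive(n - 1, counter) + fib_recursive(n - 2, counter)


def fib_iterative(n):
    """Same sequence computed with a loop: n iterations, no recursion."""
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


if __name__ == "__main__":
    calls = [0]
    print(fib_recursive(20, calls), "computed with", calls[0], "calls")
    print(fib_iterative(20), "computed with 20 loop iterations")
```

For n = 20 the recursive version needs 21,891 calls to reach the same 6765 that the loop produces in 20 iterations, so the timing is dominated by call overhead rather than arithmetic.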

In contrast, if you look e.g. at the Matlab values, those benchmarks that measure real performance of the underlying system, or contain loops that can be easily vectorized (pi_sum, mat_mult) are about the C level. Which tells you that Fortran does not really excel, but is about average as one would expect intuitively.

I will restrain myself and not continue bashing Fortran for the lack of good libraries and stuff, but your claim is simply wrong. The benchmark by design does not show that Fortran is superior.

The reason is pretty simple. It offers C-like times (to within an order of magnitude), and coding in it is simpler than in C as long as your problem lacks complicated derived data types and is restricted to array operations. Many problems solved today fit those restrictions.

Julia is not suited for HPC on an MPI supercomputer, as far as I can tell, at least in its current state of development.

So my question is: why should it not?

Hmm: the benchmarks say that there is a four order of magnitude variation in the performance of compilers for widely used and popular languages for simple core computational tasks.

Despite the fact that commodity computing is now a near monoculture, and most of these compilers, to a first approximation, cross-compile into something like C object code and run under a *nix kernel on a very predictable CPU.

I would have expected the compilers to all cluster near 1 for these benchmarks, with NONE outperforming C and some underperforming by a factor of a few, depending primarily on how dynamic the structure was.

Fortran is average in the sense that it is the extreme outlier, that is the ONLY one of these languages to significantly outperform C on any of these computational tasks, and only underperforms significantly on the one obvious one. Despite the fact that this is the GCC fortran compiler!

This tells me that my naive prejudice is right: which is that Fortran is still optimal for certain arithmetic intensive scientific computing tasks; and is still mediocre at I/O and suboptimal at bit level manipulation of strings. As always.

It also tells me that most of the newer languages are still unsuitable for CPU intensive scientific computing, by orders of magnitude, even if they are convenient for low intensity computing where manhours are more valuable than CPU hours.

After 50 years, there is still a large class of numerical computing where the only way to beat Fortran is to hand optimize code at the machine level.

I have survived far too many computational theological skirmishes to make any claims of superiority of anything over anything else (other than Mac vs PC natch, or *nix vs M$, or TeX vs anything or...). Choose the tool that suits the task. This tells me what Fortran is (still) suitable for. For me.

Julia is looking like an interesting candidate for a viable future computer language and possible Python replacement - I am somewhat underwhelmed by Python. Julia is at release 0.2, I believe - we'll see how it looks at release 0.9.

As for libraries - I would argue that the main reason Fortran survives is not in fact the speedup, but the legacy code - especially the large libraries of verified and validated subroutines of scientific and mathematical tools available out there, but also some open source ready-to-run out of the box code that is useful. In fact a fair amount of the useful scientific C code is either translated from legacy Fortran, or is C main wrappers around thinly disguised Fortran subroutines -but I digress...

For whatever mostly accidental legacy reasons, the de facto rules for Fortran allow compilers to make pro-optimization choices. In C it is hard to disambiguate memory pointers: "if I have two statements, x=y followed by, say, a=b, can I safely change the order I do them in? You have to know if the address spaces of any of these numbers overlap. That's usually guaranteed in Fortran". Also, the Intel Fortran compiler does a pretty good job. Modern x86 processors have vector-like units, which can perform several times faster, but the compiler has to be able to figure out that loops, or loop fragments, can safely vectorize. Alignment of stuff in memory is also very important: loads and stores that span word or cache line boundaries are inefficient. Again, with Fortran you can have common blocks aligned properly (and the compiler is aware of this).
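The reordering question can be illustrated with a small Python sketch. It is only an analogy, not how any compiler works internally: two `memoryview` slices of one `bytearray` stand in for two pointers into the same memory, and all names here are hypothetical. The same set of assignments is issued in two different orders; the result is order-independent only when source and destination do not overlap.

```python
def copy_forward(dst, src):
    """Copy element by element, lowest index first."""
    for i in range(len(src)):
        dst[i] = src[i]


def copy_backward(dst, src):
    """The same assignments, issued in the opposite order."""
    for i in reversed(range(len(src))):
        dst[i] = src[i]


def run(copy, overlapping):
    """Copy the first four bytes of b"abcde"; return the destination buffer."""
    buf = bytearray(b"abcde")
    src = memoryview(buf)[:-1]
    if overlapping:
        dst = memoryview(buf)[1:]  # dst and src share storage, shifted by one
        copy(dst, src)
        return bytes(buf)
    out = bytearray(4)             # separate storage, no aliasing
    copy(memoryview(out), src)
    return bytes(out)


if __name__ == "__main__":
    print(run(copy_forward, overlapping=False), run(copy_backward, overlapping=False))
    print(run(copy_forward, overlapping=True), run(copy_backward, overlapping=True))
```

Without overlap both orders give `b"abcd"`. With overlap the forward order smears the first byte across the buffer (`b"aaaaa"`) while the backward order yields the intended shift (`b"aabcd"`). A compiler that cannot rule out the overlapping case has to preserve the written order, which limits reordering and vectorization.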

A few years back, I played some bit crunching games, stuff like the popcount (number of one bits) in an array of 1000 64-bit words, and Fortran clobbered C!
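For readers who have not met popcount, here is a hedged Python sketch of the kind of kernel being described. It is not the commenter's code; the function names are made up, and the bit-parallel (SWAR) formulation shown is one common way to count bits without a loop over individual bits.

```python
MASK64 = 0xFFFFFFFFFFFFFFFF


def popcount64(x):
    """Count the one bits in a 64-bit word using bit-parallel (SWAR) sums."""
    x &= MASK64
    x = x - ((x >> 1) & 0x5555555555555555)                         # 2-bit sums
    x = (x & 0x3333333333333333) + ((x >> 2) & 0x3333333333333333)  # 4-bit sums
    x = (x + (x >> 4)) & 0x0F0F0F0F0F0F0F0F                         # 8-bit sums
    # Multiplying adds all byte sums into the top byte; Python ints are
    # unbounded, so mask back to 64 bits before shifting it down.
    return ((x * 0x0101010101010101) & MASK64) >> 56


def popcount_words(words):
    """Total number of one bits across an iterable of 64-bit words."""
    return sum(popcount64(w) for w in words)


def popcount_reference(words):
    """Straightforward reference: count '1' characters in the binary string."""
    return sum(bin(w & MASK64).count("1") for w in words)


if __name__ == "__main__":
    # 1000 arbitrary 64-bit words, generated deterministically.
    words = [(i * 0x9E3779B97F4A7C15) & MASK64 for i in range(1000)]
    print(popcount_words(words) == popcount_reference(words))
```

In a compiled language the same kernel is a tight loop over the array, which is where compiler choices about vectorization and hardware popcount instructions would show up.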

Well, I'd actually claim that Fortran rules are quite deliberately chosen to make certain optimizations more optimal at the expense of a less elegant and flexible language (barring illegal deliberate overrides of common blocks and other fun games). C's choice of how to handle pointers makes optimization harder for many tasks - it would be easy to change this, or have compile options which made trade-offs, but these are not made because maximizing numerical throughput is not the overriding optimization concern for C design. If it were, it'd be F95. ;-)

If you really need to do cpu limited bit operations and C is not fast enough then go to machine level...

I will purely restrict this post to the benchmark and grind my teeth not to argue further against Fortran.

The benchmark is set up on purpose to test the speed of low-level language features, such as function calls, for loops etc. The test cases are heavily geared towards that without even the simplest optimizations. This has three consequences:

1. _Any_ reasonably low-level language with a decent compiler will produce approximately the same speed. So the speed of Fortran, C, C++, even Basic, Pascal, Java etc. will be about the same. Go, if I recall correctly, simply does not have a good compiler.

2. All interpreted languages (all examples except Go and Julia) have made deliberate tradeoffs. They are slower in handling low-level features like function calls, but more flexible/easy to use. That is why you do not program in an interpreted language like in a low-level language; instead you use functions provided by the respective standard library (this is coding 101). Consequently, it is completely unsurprising that the interpreted languages are slower.

3. Commenting on how bad the other languages (except Fortran and Julia) are is pointless: these languages have been deliberately misused in this benchmark. When they have been used correctly (like the multiplication case for Matlab and Mathematica), they give similar performance to low-level languages, which again is not surprising.
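As a rough illustration of the second point, here is a Python sketch of a pi_sum-style kernel written two ways. The function names and the upper limit of 10000 are assumptions for illustration, not taken from the benchmark source. The first version spells out the loop as one would in a low-level language; the second hands the accumulation to a standard library routine.

```python
import math


def pi_sum_loop(n=10_000):
    """Low-level style: explicit loop and accumulator."""
    total = 0.0
    for k in range(1, n + 1):
        total += 1.0 / (k * k)
    return total


def pi_sum_builtin(n=10_000):
    """Library style: let math.fsum do the accumulation."""
    return math.fsum(1.0 / (k * k) for k in range(1, n + 1))


if __name__ == "__main__":
    print(pi_sum_loop(), pi_sum_builtin(), math.pi ** 2 / 6)
```

Both converge towards pi²/6. How much the library version helps depends on the language and on how much of the work the library can take over; array languages such as Matlab can push the whole loop into compiled code, which is the case the comment has in mind.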

Another post, because there are two major errors in the followup posts:

1. C is faster than Fortran. Iff you know how to optimize. The simple reason is that C allows more fine-tuning. For example, the pointer problem mentioned by Omega can be overridden by a keyword (restrict, in C99) that tells the compiler "The values at this pointer position should not be changed by other variables", leading to Fortran-like output. As an example, the linear algebra library that they used in the benchmark is written largely in C.

2. Fortran is not "safe". In fact, the pointer problem mentioned by Omega can be easily reproduced in Fortran; just pass two overlapping pointers (sub-arrays of an array) to a function. The Fortran compiler will by default produce incorrect, but fast code, while the C compiler produces by default (without keywords) correct, slower code.

Choose the tool that suits the task.

This cannot be emphasized enough.

I frequently use C because it has certain features I find desirable. Specifically, it lets you work closely with the operating system (no surprise, since Unix was written in C). I like to have access to command line arguments, for instance. C is not the only way to do this (you can do a Bourne shell script instead), but it is one of the more efficient ways to do it. Fortran is specifically designed for numerical computation, so of course it is frequently used for that purpose. In many cases the best solution is to write a C wrapper to handle the I/O and OS level stuff, and call Fortran functions/subroutines to handle the actual mathematical details. As Steinn notes, there are many legacy libraries in Fortran, which allow coders to avoid reinventing wheels.

Somebody upthread mentioned Numerical Recipes. I have the second edition C version of that book. It is occasionally useful as a reference for basic numerical techniques, but it is also an object lesson in how not to write C code. Comments and interior spaces are wonderful things which vastly improve code readability. And speaking of comments: Their purpose is not so much to help other people figure out what the hell you were thinking when you wrote that piece of code, as to remind you what the hell you were thinking when you return to that code after three weeks or three months or three years.

In my past life in supercomputing I came to realize that in the STEM field, many endeavours have pushed as far as the current computing technology will allow. This means there is often an opportunity available for those who can find some clever mechanism to push the limits of what can be computed.

Today that has often been translated into the attempt to use graphical processors to accelerate some computing tasks. But the basic x86 CPUs contain some pretty impressive compute engines derived from the SSE technology. In the near future these will be able to process 512 bits of information with a single instruction on a single core, and some chips may have 16 cores. If you can pack the data bitwise and work on it in a bit parallel fashion, this means that you have access to several thousand bit operations per machine cycle per chip, i.e. many trillions of bit operations per second per chip. This can enable tremendous capabilities in certain situations. Fortran does allow you nearly full access to these capabilities.
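The arithmetic behind "several thousand per cycle" and "many trillions per second" can be checked with a short Python sketch. The 512-bit width and 16 cores come from the comment above; the 3 GHz clock and the rate of one vector instruction per core per cycle are assumptions chosen only to make the estimate concrete.

```python
def bit_ops_per_cycle(vector_bits, cores, instructions_per_cycle=1):
    """Bit operations a chip can issue in one clock cycle."""
    return vector_bits * cores * instructions_per_cycle


def bit_ops_per_second(vector_bits, cores, clock_hz, instructions_per_cycle=1):
    """Peak bit operations per second at the given clock rate."""
    return bit_ops_per_cycle(vector_bits, cores, instructions_per_cycle) * clock_hz


if __name__ == "__main__":
    per_cycle = bit_ops_per_cycle(512, 16)                   # 8192
    per_second = bit_ops_per_second(512, 16, 3_000_000_000)  # assumed 3 GHz clock
    print(per_cycle, per_second)
```

Under those assumptions the chip peaks at 8192 bit operations per cycle and roughly 2.5 x 10^13 per second, which matches the order of magnitude quoted above.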

Minor nit: JavaScript and Java are completely different languages. It's just a coincidence that they have similar names.

Yay! Just what we need. Another new programming language.

Since my last post was maybe a bit too righteous, and another day has passed, I can maybe summarize my arguments better.

I agree with Eric Lund, I am well aware that you can also write even good code in Fortran. I am aware that STEM people are often bad coders, which causes a good part of Fortran's reputation. And I have seen that these same people can write horrific C++ code.

What bothered me in the original post was the incorrect representation of the benchmark results, and the self-righteous "Fortran is best". "Real programmers" outside a very narrow STEM field do not use Fortran, because for many applications it actually sucks (and even in STEM there are many applications that are not only number crunching but also involve writing a big program that you can maintain, test and modify).

The real competition is not between Fortran and, say, JavaScript in a benchmark testing recursion, but between, say Fortran code and well-designed, highly expressive numerical libraries in other languages. I mostly code in C++, so the examples that I have come across are boost::odeint, or thrust, which are worlds beyond what I could imagine in Fortran.

Fortran is currently being used to get spacecraft to orbit, and will be for a long time to come (I speak with first hand knowledge).

Fortran's benchmarks are like Beckenbauer entering open trials for the German team for this summer's World Cup and not only winning the sweeper slot, but being competitive for both winger and striker and only just losing out for the goalie...

It is one thing knowing that it ought still be good for something (I actually do think Fortran would make a great coach, at least for the English team) and definitely not be the best at others (eg never make goalie for the Dutch team) but for it to be broadly competitive at all is amusing, and so apparently think the intertubes.

Though apparently the most interesting thing on the intertubes is someone lazily and hastily confusing one early 90s web oriented structured object oriented code loosely based on C, for another entirely different, similarly named structured object oriented code loosely based on C, but using a slightly different syntax and structure, and with a very different target audience...

java/mocha/whateva

Must remember that if I ever want to pump up traffic.

Since you're not taking this too seriously, here are some funny answers:

http://stackoverflow.com/questions/245062/whats-the-difference-between-…

If you want to pump up traffic, drop tantalizing hints about upcoming extrasolar planet announcements and rhapsodize about where the US Navy has placed its carriers. Let's go back to the good old days!

Oh, I figured out how to pump up traffic a few years ago, and doing so felt too slimy to actually do it. I'd have to go too far downmarket for my tastes, and go to gee-whiz on the hype. Also too busy with my real jobs.

I actually have a real problem with blogging about sciency bits, which is that I am now an ApJ Science Editor, and I Must Not, in general, do stuff like leak interesting hints about extrasolar planets - I'm handling a significant fraction of the papers.

We haven't decided how to handle this yet, since I think the ApJ vaguely does think it might like to join the modern world in terms of communicating exciting sciency bits.

I don't think the US Navy is crazy enough to cross the Bosphorus with a carrier group...

On the other hand that might be better than me feeling like I must keep the blog alive by posting another free verse.

OTOH - Real programmers still use C for benchmark baselines.

I don’t think the US Navy is crazy enough to cross the Bosphorus with a carrier group…

Why would they need to? If they were inclined to stir up trouble over the Russia/Ukraine situation, or even be on hand as a deterrent, they could park that carrier group just south of the Dardanelles, or use NATO bases in Poland and other nearby countries. Not that I think the CinC is eager to stir up trouble, although some of the fire-breathers in the opposition are. Besides which, is the Turkish government crazy enough to allow a US carrier group to transit the Bosphorus?

Poland to Crimea/Eastern Ukraine is a long way to go... but no, there is no way Turkey would permit hostile passage of the Bosphorus, however amusing it'd be for a demonstration off Sevastopol...

I personally favour the theory that Crimea has formally reverted to Turkey now that it has separated from the Ukraine ;-)

Ulf@13: "Fortran is not 'safe'. In fact, the pointer problem mentioned by Omega can be easily reproduced in Fortran; just pass two overlapping pointers (sub-arrays of an array) to a function. The Fortran compiler will by default produce incorrect, but fast code, while the C compiler produces by default (without keywords) correct, slower code."

Sorry, but this is wrong. The Fortran language specification says that you are not allowed to do that. Therefore the compiler does not produce incorrect code; it's the programmer who broke the rules, not the compiler.

Language standards are a contract that cuts both ways. They are not just restrictions on the compiler, but also on the user.