When I think of molecules, I think of Conan O'Brien doing his skit where he plays Moleculo... the molecular man! I don't think of astronomy, and I certainly don't think of the leftover radiation from the Big Bang (known as the cosmic microwave background)! But somebody over at the European Southern Observatory put these two together and made an incredibly tasty science sandwich.

See, we can measure the cosmic microwave background today, because we have photons (particles of light) coming at us from all directions, at every location, with the spectrum of a glow at a temperature of 2.725 kelvins. Theoretical cosmology tells us that when the Universe was younger, it was also smaller. And because the expansion of space stretches the wavelengths of the photons traveling through it, causing them to lose energy, those photons must have been hotter when the Universe was younger.
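
If you like your cosmology with numbers attached, the "stretched photons" argument boils down to one simple scaling law: the background's temperature goes up in proportion to (1 + z), where z is the redshift, so T(z) = T₀(1 + z), with T₀ = 2.725 kelvins today. Here's a minimal Python sketch of that relation and its inverse. It assumes the textbook picture in which the background radiation simply cools as space expands, and the function names are made up for illustration.

```python
T0 = 2.725  # CMB temperature today, in kelvins


def cmb_temperature(z: float, t0: float = T0) -> float:
    """CMB temperature at redshift z, assuming T scales as (1 + z)."""
    return t0 * (1.0 + z)


def redshift_for_temperature(t: float, t0: float = T0) -> float:
    """Invert T = t0 * (1 + z): the redshift at which the CMB had temperature t."""
    return t / t0 - 1.0


for z in (0.0, 1.0, 2.0):
    print(f"z = {z}: T = {cmb_temperature(z):.3f} K")
```

Plugging in z = 2, for example, gives 8.175 kelvins: three times today's value.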

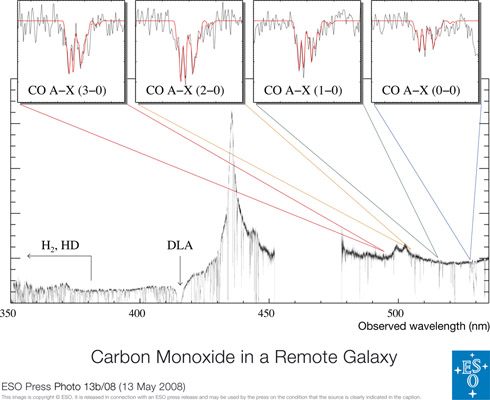

But we'd never been able to actually measure that, of course. After all, how can you measure the temperature of something in a place where you aren't? (Hint: read the title of this post.) Use molecules as thermometers! Using carbon monoxide molecules (CO to you chemists) in a distant galaxy, they were able to measure the temperature of the microwave background when the Universe was only about 3 billion years old! The temperature they measured was 9.15 ± 0.70 kelvins, which compares pretty well with the predicted temperature of 9.315 kelvins. Not bad! Here's an incomprehensible graph for you to look at while you take it all in:
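
So how does a molecule act as a thermometer? Carbon monoxide can only spin at certain allowed rates (rotational levels J = 0, 1, 2, ...), and in thermal equilibrium the number of molecules in each level follows the Boltzmann distribution, N_J / N_0 = (2J + 1) exp(−E_J / kT). Measure how the molecules are spread across those levels, and you can solve for T. Below is a simplified Python sketch of that inversion. It assumes a rigid-rotor CO molecule (rotational constant of roughly 57.6 GHz, consistent with the well-known 115.27 GHz J = 1→0 line) whose levels are set purely by the microwave background. A real analysis also has to account for other ways the gas gets excited, such as collisions, so treat this as a cartoon of the method rather than the ESO team's actual analysis. The function names are hypothetical.

```python
import math

# Physical constants (SI units)
H = 6.62607015e-34   # Planck constant, J*s
K_B = 1.380649e-23   # Boltzmann constant, J/K

# Approximate rotational constant of CO in Hz (assumption: rigid rotor;
# the J = 1 -> 0 line at ~115.27 GHz corresponds to 2B)
B_CO = 57.636e9


def level_energy_over_k(j: int) -> float:
    """Energy of rotational level J above J = 0, expressed in kelvins (E_J / k)."""
    return H * B_CO * j * (j + 1) / K_B


def population_ratio(j: int, t: float) -> float:
    """Boltzmann ratio N_J / N_0 for CO in equilibrium at temperature t (kelvins)."""
    degeneracy = 2 * j + 1
    return degeneracy * math.exp(-level_energy_over_k(j) / t)


def excitation_temperature(j: int, ratio: float) -> float:
    """Invert the Boltzmann ratio: the temperature that produces N_J / N_0 = ratio."""
    degeneracy = 2 * j + 1
    return level_energy_over_k(j) / math.log(degeneracy / ratio)


# Round trip: populations set by a 9.15 K background, then read the thermometer back.
for j in (1, 2):
    r = population_ratio(j, 9.15)
    print(f"J={j}: N_J/N_0 = {r:.3f} -> T = {excitation_temperature(j, r):.2f} K")

# How well does the measurement agree with the prediction?
measured, sigma, predicted = 9.15, 0.70, 9.315
print(f"Offset from prediction: {(predicted - measured) / sigma:.2f} sigma")
print(f"Redshift implied by T0 * (1 + z) = {predicted} K: z = {predicted / 2.725 - 1:.2f}")
```

The round trip recovers the temperature you started with, and the last two lines show just how "not bad" the result is: the measurement sits only about a quarter of a standard deviation from the prediction, and, under the T₀(1 + z) law, the predicted 9.315 kelvins corresponds to a redshift of about 2.42.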

By the way, while I've got you thinking about astronomy, NASA just announced that their X-ray satellite, Chandra, found a supernova in our own galaxy that went off in the 1800s, making it the most recent known supernova in our galaxy! Why'd it take so long to find? Because the whole damned galaxy was in the way: the explosion happened on the opposite side!